Alive And Well: Modern Data Warehousing With Philip Russom, Tyler Owen, And Chris Gladwin

Remember when Hadoop was predicted to replace the data warehouse? How’d that work out for Hadoop? Data warehousing is doing just fine, and has evolved in a variety of customer-friendly ways in the last few years. It can also play nice with data science and data lakehouses, as well as modern data pipelines and other interesting analytics architectures. There are even hyperscale data warehouses these days!

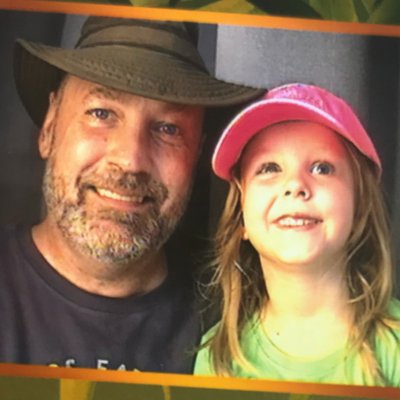

Find out more on this episode of DM Radio, as host Eric Kavanagh interviews veteran analyst Philip Russom, along with Tyler Owen of Teradata, and Chris Gladwin of Ocient.

—

Transcript

[00:00:42] Eric: I’m very excited to have an all-star lineup of some of my old pals on the line and some new friends as well. We’re going to talk about modern data warehousing, alive and well. I’ve been in this business for a long time. I’ve been studying data warehousing for over twenty years. I’m embarrassed to say how long it has been. A lot has changed, but a lot of things have stayed the same.

We have Philip Russom, who is now on his own. I’m very excited to hear that. He was most recently at Gartner, and I knew him from the TDWI days, The Data Warehousing Institute, as it was called. We also have Chris Gladwin from Ocient, a very interesting company. It’s a hyperscale data warehouse. They have been building this for a while now. It’s very cool stuff. Tyler Owen is with us as well from Teradata, the 800-pound gorilla of the analytics space. They have lots of friends and so many databases.

Think about cloud data warehouses. You’ve got Amazon Redshift and Google with BigQuery. You have Teradata out there. You have Snowflake, the big 800-pound gorilla of the cloud. They have been around for a while now too, doing some interesting things. Let’s get some introductory comments from each of our guests. Philip Russom, I’ll throw it over to you first. For our broad audience out there, we’re in New York, San Francisco, and a whole bunch of different markets. Data warehousing has been around for a while, but it’s very much alive and well, and Hadoop is not.

[00:02:06] Philip: That’s true. It’s like that old phrase, “I wish I had a nickel for every time somebody said the warehouse was going away.” They don’t go away. Part of the reason is that data warehouses continue to evolve. They evolve so that they can do two things. Number one, continue to be relevant for the business they serve, because that’s what a data warehouse does. It brings together data and models it in such a way that the data represents the whole enterprise.

If you want to do reporting and analytics where you’re looking at the whole enterprise, the data warehouse is the platform. It’s the data architecture, a special design that helps you pull data together for that broad view. Data warehouses don’t just gather a lot of data. They tend to improve it and model it very carefully, so that your queries perform well and you can easily recalculate 20,000 metrics overnight and then feed them into 7,000 management dashboards.

Warehouses tend to help with large-scale, business intelligence-type stuff. But we’re all moving into new forms of analytics. The data warehouse is evolving to be not just the premier source for reporting, dashboards, OLAP, and some other stuff. It now has to be the premier source for certain types of analytics that the warehouse originally was not designed for.

We have massive amounts of data brought in for data science and a variety of advanced analytics. What most organizations want is predictive analytics. To get predictive analytics, you have to do a lot of background work in machine learning and artificial intelligence. Those are very different data requirements for the warehouse, but the warehouse is stepping up and supporting those broad use cases as well.

[00:03:46] Eric: That’s good stuff. For some opening statements, I’ll bring in Chris Gladwin from Ocient first, a company not too many folks have heard about yet. Hopefully, we’re going to change that on this show, because these folks have a very ambitious worldview. They have spent a number of years on this. Version 19 is their first GA release.

That shows you that they wanted to get it right but when you’re building for hyperscale, you have to look at every single component and make sure it’s architected properly. Philip made a good point. Architecture is important. We will talk later about schema-on-read and stuff like this that we have come up with in the last few years. Architecture is crucially important, especially if you want to build something big and tall. Tell us about what you’ve done, Chris.

[00:04:29] Chris: I would build on what Philip was saying. You have to design not only at the application level but at the data warehouse engine level for the use cases you are focused on. One size does not fit all. If you think about what a pickup truck is good at, that’s very different from what a freight train is good at. Those are different use cases. If you design for one, you’re going to be great at that. You’re not necessarily going to be able to do everything.

That’s one of the big changes. With the scale of data growing so much, you can’t just be pretty good. You have to be amazing at that functionality to deliver on it. There’s a big difference between approaches. Take separating compute and storage: there are advantages to that. There are also advantages to combining compute and storage into one tier, which is what we do. It’s important to know the use cases that you’re focused on and optimize for them.

[00:05:19] Eric: We talked on a show about Tesla and how Elon Musk didn’t just make some incremental changes to car design. They thought through the entire end-to-end manufacturing process and redesigned something amazing. I don’t know if it’s still the case, but I saw a graphic comparing the market cap for Tesla with the combined market cap of every other car manufacturer in the world. They were equal. That’s because they thought through the whole process and reinvented all the wheels. What do you think, Chris?

[00:05:58] Chris: We had the same challenge. We started the company because, many years ago, the largest data-analyzing organizations in the world asked us to see if we could solve this problem of limitless-scale analysis, not in terms of how much data you store but how much data you analyze. Our focus is on use cases where the average query is going to touch a trillion or more records, or half a petabyte to a petabyte or more of data, not stored but analyzed to return that query.

To deliver at that level, we had to build a whole new architecture and engine from the ground up. There’s a lot of value in the other approach: you’re seeing a lot of companies doing amazing things by separating compute and storage over a network. We do exactly the opposite. We focus exclusively on use cases where these giant queries come in thousands of times a day, or even a minute, and each one has to touch a trillion things. We want compute and storage together.

When we run benchmarks against clusters that separate compute and storage, which is their architecture, they get tens of gigabits per second of bandwidth between those two tiers, and you see that in the performance. In a typical small cluster of ours, we get 6,720 gigabits per second of bandwidth between our compute and storage tiers, because they’re connected not by a network but by multiple parallel PCIe lanes.

If we do our job as software designers, we’re going to go at least ten times faster because we have so much more bandwidth. You also see a huge difference in the number of parallel threads. We designed our architecture at a time when you had high-core-count CPUs and NVMe solid-state drives, to make all that stuff go as fast as it can go. You could have a million parallel tasks in flight on a system and do that 10,000 times a second. That’s a remarkably different architecture. It’s great at hyperscale and only does hyperscale.
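
As a rough worked example of why the bandwidth gap matters, the sketch below computes how long it would take just to move half a petabyte (the query size Chris mentions) across each link. It assumes 40 Gbps as a stand-in for “tens of gigabits per second” (our assumption, not a benchmark figure), decimal units, and that link bandwidth is the only bottleneck.

```python
def transfer_seconds(data_bytes: float, bandwidth_gbps: float) -> float:
    """Idealized time to move data_bytes over a link rated in gigabits/second.

    Ignores CPU, decompression, and protocol overheads, so real scans are slower.
    """
    return data_bytes * 8 / (bandwidth_gbps * 1e9)


HALF_PETABYTE = 0.5e15  # bytes, decimal units

# 40 Gbps is an assumed stand-in for "tens of gigabits per second".
networked = transfer_seconds(HALF_PETABYTE, 40)
pcie_attached = transfer_seconds(HALF_PETABYTE, 6_720)

print(f"network-separated: {networked / 3600:.1f} hours")
print(f"PCIe-attached:     {pcie_attached / 60:.1f} minutes")
print(f"bandwidth ratio:   {networked / pcie_attached:.0f}x")
```

Under these assumptions the raw link alone accounts for roughly 27.8 hours versus 9.9 minutes, a 168x gap. Chris’s “at least ten times faster” is more conservative than that ratio, plausibly because software, CPU, and storage overheads eat into the theoretical advantage.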

[00:08:02] Eric: I learned from our mutual buddy, a guy named Mark Madsen, the critical importance of design point: knowing what you’re trying to build and understanding the environment around you. One of the challenges, and I suppose one of the exciting parts, of software design in the modern era is that everything is changing pretty quickly. The hardware changes. The chips change. How you put systems together changes. You have to have this long view of where you want to go and be very methodical and patient in getting there. It sounds to me like that’s exactly what you’ve done, Chris.

[00:08:37] Chris: I would assert that the changes you’ve seen in hardware are, at the end of the day, what’s going to drive the capabilities and performance you can get out of your software and data warehouse. The changes we saw over the last few years were the biggest changes in computing. Before, it was bigger, faster, and cheaper. For the first time, it was new. High-core-count CPUs, with that many cores in parallel, were new. That was a very different thing. It wasn’t just faster.

Compare NVMe drives to spinning disks. For 50 years, the performance you could get out of a database at scale was limited by how fast a spinning disk could do random reads and writes. That is driven by a physical phenomenon. How fast is the platter spinning? How fast does the read/write head move? That was 500 a second for the past 50 years. Relative to Moore’s law, it was getting slower.

By contrast, instead of 500 random 4K reads a second, an NVMe drive will give you a million. It’s not a little bit faster. The other difference is that instead of wanting a sequential pattern of reads and writes, which is what a spinning disk wants, an NVMe solid-state drive wants a random pattern. You don’t want to feed it one task at a time. You want to feed it 500, going on 1,000 or more, tasks at a time. It’s fundamentally different.
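
Chris’s IOPS figures can be turned into a worked example. The sketch below uses his numbers (500 random 4K reads per second for a spinning disk, a million for an NVMe drive) to time an illustrative batch of 100 million random reads, then applies Little’s law (requests in flight = throughput × latency) to show why an NVMe drive needs many requests outstanding at once. The 100-microsecond read latency is our assumption for illustration, not a figure from the discussion.

```python
def seconds_for_reads(n_reads: int, iops: float) -> float:
    """Seconds to finish n_reads random reads at a sustained rate of iops."""
    return n_reads / iops


def required_in_flight(target_iops: float, latency_s: float) -> float:
    """Little's law: average requests in flight = throughput x latency."""
    return target_iops * latency_s


N_READS = 100_000_000  # 100 million random 4K reads, an illustrative workload

disk = seconds_for_reads(N_READS, 500)        # Chris's spinning-disk figure
nvme = seconds_for_reads(N_READS, 1_000_000)  # Chris's NVMe figure
print(f"spinning disk: {disk / 3600:.1f} hours")
print(f"NVMe:          {nvme / 60:.1f} minutes")

# Assumed ~100 microsecond NVMe read latency (illustrative, not measured).
LATENCY_S = 100e-6
print(f"one request at a time caps the drive at {1 / LATENCY_S:,.0f} reads/s")
print(f"requests in flight for 1M reads/s: {required_in_flight(1_000_000, LATENCY_S):.0f}")
```

With these inputs the disk needs about 55.6 hours and the NVMe drive about 1.7 minutes. The Little’s law lines capture the point about feeding the drive many tasks: at the assumed latency, a single outstanding request tops out at 10,000 reads per second, so sustaining a million needs around 100 in flight, and higher real-world latency under load pushes that toward the hundreds Chris describes.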

[00:09:55] Eric: I’ll bring Philip back in to comment on that, because this is fascinating. You have to have this long view in our business if you’re going to design something. All the kids say now, “Skate to where the puck is going to be.” [inaudible] The Sharks got good. Everyone is using hockey metaphors. Even though they don’t know anything about hockey, they’re using hockey metaphors. I’m in Pittsburgh. We know all about hockey.

It’s very interesting that they had this big vision. They went out and had to address all these little component parts along the way to figure out how to do it. That’s why it took nineteen versions to get to GA, but if you’re going to build the next pyramid, you have to be strategic about it. What do you think about all these moving parts and where the industry is going versus where Ocient is going?

[00:10:40] Philip: It’s a big challenge. Here in Boston, we’re all Bruins fans. There are so many parts in our environment for data and analytics. When you stretch it out beyond the database parts and go into engines and tools for reporting and all kinds of crazy analytics and data science, the list is daunting. It’s scary. This is why user organizations will have teams with a lot of different specialties. They parcel that out.

We’re rethinking data engineering, with people who are all data engineers but some of whom specialize in data integration or pipelining, others in data quality, and so forth. This is the way a lot of user organizations handle the complexity. A lot of vendors have looked at this too. If you look at a lot of the recently built data platforms, they’re very service-oriented. They’re based around web services. That’s built into the name of Amazon Web Services.

That’s one way to come at it from a vendor’s point of view. I see some users able to think in those terms as well and work with an environment where functionality is potentially a plethora of services. Sometimes it’s a challenge, because you’ve got so many services that it’s hard to know which five you need for the use case you’re working on. Some organizations, especially if the data people have more of an applications development background, are used to thinking in those terms, but otherwise, finding the functionality in some of these giant systems can be challenging.

[00:12:11] Eric: Chris, I’ll throw this one back over to you. We will get Tyler Owen from Teradata into the next segment. I have learned a lot because I’m in this space and I have a voracious appetite. I’m a very curious person. When you can deliver a query the size you’re talking about at the speed you’re talking about, that changes the rhythm of an organization and how people work every day, which is a big deal. Talk to us about that angle and how you are able to get people excited. Go ahead.

[00:12:40] Chris: We all have been a part of dozens of disruptive changes in technology, so we have seen them happen. Usually, eighty-plus percent of the ultimate uses of a disruptive change are new uses. One of the challenges of new uses is that it’s very difficult for an organization to implement something so different. If what you’re creating is 50% faster or 30% faster, that’s easy. Drop it in. It fits in with what was there. If you put in something that’s 10 times or 100 times the price-performance of what was there before, often that’s new functionality.

If it’s existing functionality, it’s going to break other things that it’s next to. Those other things aren’t used to it: “Don’t hit me that fast.” It ends up being much more difficult for organizations to do. What you see at a lot of enterprises is teams that specialize in these kinds of transformational uses, like an innovation team or a new product introduction team, because it’s hard to do. Doing something that is so much better, faster, bigger, and cheaper is much more difficult to implement, particularly for IT professionals, than something that’s an incremental change.

[00:13:50] Eric: That’s a good point. I think back to my old days of doing print production. There was a prepress company that we would work with. They went out and bought all the new Macs because they wanted to be able to do good stuff, but even so, if you wanted to do a particular layout or run an algorithm on one big graphic, and it was a big enough graphic, they would hit the enter button, go home for the weekend, come back, and find it done. That’s a very strange cycle time to be on. That’s not good for analytics, for darn sure.

In the analysis space, that’s why you want to have that interactivity and be able to ask a question, because it’s usually the 2nd, 3rd, and 4th questions that lead to the answer you were looking for. In the old days, when it was too slow, people didn’t go down that road. If it takes too long for the query to come back, you’re going to do something else and go with gut instinct. Philip, what do you think? Changing the pace of an organization and of an individual’s working day is what we’re talking about doing. If it’s too disruptive, that does cause some issues. What do you think?

[00:14:55] Philip: Those are all valid points. I would point to some use cases around data warehousing that have to have those perky queries that we all wish for. One of them is the rise of self-service data access. Self-service can take many different forms but there are typically a few steps that go into a self-service session. First of all, quite often, it’s a fairly non-technical user.

It’s typically a business person who knows enough about the relational paradigm to be dangerous, as they say, and because of that, they’re able to go in, browse data, search data, and create some straightforward queries. The other steps in self-service depend on the time they spend exploring data, which often depends heavily on query technologies. If a query takes more than a minute, they hit the limit of their attention spans. They will get distracted and that sort of thing. Self-service is one example.

Quick queries are one of the requirements. I could go on all day. There are a lot of requirements for self-service. You can’t throw an easy tool over the wall and expect the users to figure it out. There are a lot of requirements, but one of them is that you’ve got to have the perky queries to make this work. And it’s not just the business person doing self-service. We also see a lot of data exploration by people like data scientists. When they start a project, they start with that same dependency on queries.

[00:16:17] Eric: That’s a very good point. Our studio audience has a couple of good questions coming in. You can be in the studio audience too. Send me an email at [email protected]. We have been doing this for a long time. Philip was there in the earliest days. You were on in our first year, Philip Russom. We’re talking to Philip, Chris Gladwin of Ocient, and Tyler Owen, waiting very patiently in the wings, from a company called Teradata. Tyler, tell us a bit about yourself and what you’re doing in the world of modern data warehousing.

[00:18:14] Tyler: Eric, thank you for having me on. I appreciate it. I’m happy to be here. First of all, fifteen years isn’t a long time. Teradata has been around a lot longer than that. We were the original Born to Be Parallel database. We have managed to grow and change quite a bit. I have watched a lot of technologies come and go. Some of the ones emerging now are interesting. We are bringing several new pieces to market. It’s very exciting, but the whole market space, as you were talking about earlier, is evolving dramatically. It’s a fun place to watch and a fun place to work.

[00:18:44] Eric: Teradata has done some pretty interesting things. You have Teradata Vantage in the cloud. There was a good quote from someone who was in our live studio audience here. Maybe I’ll throw this over to you. Someone writes, “I see cloud as a hosting option versus an internal company hosting option. I worked at GM in the late 2000s. Most IT capability was hosted in many external locations like IBM, Capgemini, HP, etc. How do you view all that? How are things changing?”

[00:19:13] Tyler: Winner, winner chicken dinner. That perception is 100% accurate. We have one software solution, Vantage, but it covers multiple deployment architectures. This goes right to what Philip was saying earlier about the importance of looking at different architectures for different use cases. We still have a lot of customers who have solutions that are on the old proprietary hardware that we still sell in partnership with Dell.

We have a lot of customers in the Far East who are running VMware in their data centers. That’s still an important platform. We have some customers who say, “We are all about AWS.” Others are all about Azure. Others are all about Google. You have to be able to do not only hybrid, where you’re on-prem and in the cloud, but also multi-cloud across multiple providers.

Teradata was born to be parallel and was good at managing the scarcity of resources within a confined box but as you open up to what you would think would be limitless hardware options in the cloud, how do you start to take advantage of those? As Philip mentioned earlier, the separation of compute and storage is a big part of being able to leverage different types of storage options. Teradata has done that. We’ve got a couple of different deployment options and architectures in the cloud now, which are differentiating and setting the trend.

[00:20:31] Eric: Let’s dive into some of that. As I look at data science, for example, there are powerful data science capabilities that are overkill for some traditional BI reporting use cases. The end user has all of these different options to choose from. There are all sorts of embedded analytics solutions that bring it right into your workspace. That’s a very clever move.

Teradata has been doing that, or enabling that, for years. You do have this cloud data warehousing. Chris Gladwin talked about separating compute and storage, which is great in certain use cases when you want to be able to do certain things, but it’s not always the way to go. We have another show that we do on Thursdays called SoftWare in Motion. A guy named Chris Sachs talks about how sometimes you don’t want to do that. Sometimes you want a very purpose-built product to tackle particular needs of your business. You have to know which needs those are and what is right for you.

Data science is not going to be right for many small businesses. They need reporting and some analytic capability. The real key here is to know what you need as your organization, and then work with a trusted advisor to help you figure out where to go because the cost can go through the roof if you have the wrong technology for the wrong use case. What do you think?

[00:21:48] Tyler: You have tight SLAs, predictable workloads, and BI reporting. That’s traditional Teradata. That’s what we have been known for, and it has been our bread and butter for many years. It’s still an important part, particularly when you mentioned customer 360. That view of the customer, or that type of activity, can often fit into those workloads, but when you start to get into the data science piece, you’ve even got business departments getting involved.

Let’s say you’ve got three guys in a marketing department who want to do some segmentation analysis, or a couple of people in finance who want to do some comparisons and pull in some data sources and generic data sets. That’s when you start getting into more exploratory work. You’re way better off having those folks in their own sandbox, with their own compute clusters.

You can start them and stop them when you want. They can run against data in S3 as opposed to more expensive storage options on AWS. You can separate the workloads and then put financial operations controls on top to make sure the cost doesn’t run wild, because that’s the other thing that happens in the cloud. Everybody is excited about the cloud being cheaper, but if you don’t control it properly, it isn’t always that way.

[00:22:55] Eric: One of the funniest lines I heard is that the most ungoverned line in your IT budget is Amazon Web Services. You wake up one morning to a heart attack. I’ve had it happen in a small, microcosmic way, where you forget that something was running. You go out for lunch or dinner. You didn’t turn it off. I’ve done test marketing on stuff, and we’re talking about ad tech here. I plan to pause the campaign, but if I forget to pause it, it will keep running and spend all my money. I didn’t want that to happen. It’s a fun use case. I’ll get your thoughts on this.

I’m trying to play around with these engines to understand how to get the best quote on these different ad packages you want to buy. If you tell them it’s a big budget, that’s probably a metric they’re watching. They will also watch and see, “He always says it’s a big budget and then he stops it halfway through.” I’m sure that can be noticed after a while but these are all these interesting little dynamics that you can weave into an analytic process, which is how you make better decisions for your business.

[00:23:54] Tyler: The best way to go about it these days is with a single pane of glass that lets you look at your environment, rapidly spin it up, add users, and create administrators. It makes it easy for the business to move at the speed of business, yet you still need those financial controls to say, “Even if they leave it running, turn it off at 5:00 so it doesn’t burn up the budget running all night long.” You need to have those abilities. You need to be able to scale smartly. In other words, let’s not set it so that as soon as we get five concurrent users, we scale to another compute cluster. Do it smartly. Let’s wait until we get up to 90% utilization before we move over. Those types of things are great.
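
Tyler’s two guardrails, a scheduled shutoff so a forgotten cluster does not run all night and a utilization threshold rather than a user count as the trigger for adding a compute cluster, can be sketched as a small policy function. This is a hypothetical illustration, not Teradata Vantage’s configuration or API: the names, the 8:00 opening time, the 17:00 cutoff, and the 90% threshold are assumptions that simply mirror the examples in the conversation.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class ClusterState:
    """Hypothetical snapshot of one elastic compute cluster."""
    running: bool
    utilization: float     # fraction of capacity in use, 0.0 to 1.0
    concurrent_users: int  # tracked, but deliberately not the scaling trigger


def next_action(state: ClusterState, now: time,
                opens: time = time(8, 0),
                shutoff: time = time(17, 0),
                scale_out_at: float = 0.90) -> str:
    """Return 'suspend', 'scale_out', or 'hold' for one cluster.

    The schedule check comes first, so a cluster someone forgot about is
    suspended outside business hours no matter how busy it is. Scale-out keys
    off utilization rather than user count, so a handful of light users does
    not spin up a second cluster.
    """
    if not state.running:
        return "hold"
    if not (opens <= now < shutoff):
        return "suspend"
    if state.utilization >= scale_out_at:
        return "scale_out"
    return "hold"


print(next_action(ClusterState(True, 0.35, 5), time(14, 0)))    # hold
print(next_action(ClusterState(True, 0.93, 40), time(14, 0)))   # scale_out
print(next_action(ClusterState(True, 0.10, 0), time(18, 30)))   # suspend
```

A real deployment would also want a cooldown between scaling decisions so a cluster hovering near the threshold does not trigger repeatedly; that is omitted to keep the sketch short.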

[00:24:24] Eric: We should talk about concurrency too, because a lot of very powerful data science technologies are not so good at handling concurrent users, when 1,000 or 2,000 analysts are hacking away at the data trying to understand something. Maybe go into the logic behind how you build a solution that is good for high concurrency.

[00:24:55] Tyler: It depends on exactly the use case, as you’ve defined it. What we have found is that you need to set it up so that it can scale. Maybe it’s a small environment. You want to set it to scale from 0 to 2. Maybe it’s something you know is going to have a lot higher concurrency. Set it to 0 to 50, that type of thing. You need to have an environment with that degree of flexibility.

You mentioned the data scientists earlier and their needs being very different from a regular business user’s. You need an open ecosystem for these types of things, and the ability to bring their models and tools into the environment and run them. The young folks coming into data science and data engineering have got R and Python. Those are the tools they’re comfortable with. You need to provide a platform that lets them leverage that and then scale it concurrently, as you talked about.
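
The 0-to-2 and 0-to-50 ranges Tyler describes amount to clamping the desired number of compute clusters between a floor and a ceiling. A minimal sketch, assuming (hypothetically) that each cluster comfortably serves a fixed number of concurrent queries; the capacity of 20 used below is an illustrative number, not a vendor figure.

```python
import math


def clusters_needed(concurrent_queries: int, per_cluster: int,
                    min_clusters: int, max_clusters: int) -> int:
    """Clusters to run for the current load, clamped to [min_clusters, max_clusters].

    per_cluster is an assumed capacity: how many concurrent queries one
    cluster can serve before response times degrade.
    """
    if per_cluster <= 0 or min_clusters > max_clusters:
        raise ValueError("invalid scaling bounds")
    wanted = math.ceil(concurrent_queries / per_cluster)
    return max(min_clusters, min(wanted, max_clusters))


# A small sandbox allowed to scale 0 to 2, and a shared environment allowed 0 to 50.
print(clusters_needed(0, 20, 0, 2))      # idle, so it scales to zero
print(clusters_needed(150, 20, 0, 2))    # wants 8, capped at the ceiling of 2
print(clusters_needed(150, 20, 0, 50))   # 8 clusters
print(clusters_needed(5000, 20, 0, 50))  # wants 250, capped at 50
```

When demand exceeds the ceiling, the extra queries queue rather than spawning more clusters, which is the trade between cost control and concurrency being discussed here.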

[00:25:44] Eric: Maybe we will go around the room and get some thoughts on that. Concurrency is a big issue. Sometimes you only have five people who are going to be doing deep queries. You don’t need a whole bunch of concurrency. Chris, I’ll throw it over to you. Sometimes you have 2,000 to 5,000 analysts who want to be hitting a data set. If they’re all crashing, you’ve got a big problem on your hands.

[00:26:03] Chris: We were talking earlier about how things come around and come back around in IT in cycles. A lot of what we’re talking about in the cloud and concurrency are patterns that people are very familiar with from the mainframe. One of the things we found is there’s a lot of real decades-old expertise in workload management. If you want to deliver concurrent workloads at hyperscale, this is not something you pick up on a weekend.

The expertise it takes to handle these giant concurrent queries coming at you from every direction many times a second is not necessarily something we had in the hyperscale world, but it was dealt with in the past. We have had a lot of success bringing in talent from a company with a lot of expertise in mainframe development, people who have decades of experience in how to handle these issues.

It’s a differentiator when you get into these real-world environments. You can’t take each workload and say, “It’s a concurrency of one. I have to build a wholly separate system,” which is something we saw a lot in ad tech. What customers want is to have multiple workloads and high levels of concurrency all running on the same system. That’s hard to create. It takes a lot of work.

[00:27:16] Eric: Philip, I’ll bring you back in. I mentioned earlier this whole schema-on-read concept. You talked earlier about the importance of architecture. Architecture changes. I’ll give one random example of the importance of thinking through the architecture of a system you’re going to scale out: the difference between Zoom and WebEx.

The Zoom people figured out something the WebEx folks hadn’t, and it was fantastic. In WebEx, you would create a webinar, and it had a distinct URL. When the webinar was done, that URL was dead, and there was a new URL for the archive version. In Zoom, they were very clever. They said, “We will do it on the same URL. That way, if you show up late, you can watch the archive from the same URL.” What an idea.

It’s important to think about the use case and what the users are going to be doing with things. That goes into the decision. Then there’s this schema-on-read stuff. We always had to think through the data warehouse design to optimize for the queries people were going to make. Then people said, “Forget all that. We want to do discovery and come up with stuff on the fly.” That sounds good, but schema-on-read turns out to be hard to pull off. What are your thoughts about all that, Philip?

[00:28:23] Philip: That’s a lot of points to consider but if we narrow it down to the schema-on-read issue, there are certain things we have wanted for decades. We were able to build tools that could do it even in the ’90s but the tools didn’t work very well and typically had horrible performance to the point that people didn’t have the patience and attention span to use them.

Constructing data sets on the fly is part of that. I tried the first data virtualization tools in 1995. I was blown away by them. They were wonderful. The performance tanked to the point I wouldn’t use them. For a lot of stuff we dreamt about in the past, we now have systems that are so damn fast, scalable with volumes, and good at precaching. They’re very intelligently watching users. The tools can figure out themselves what to precache.

Everything has gotten so much better that schema-on-read can be pulled off now with pretty reasonable performance. You have to set expectations properly. Don’t tell people, “You’re going to get sub-second response.” You’re going to be lucky to get sub-minute, but that’s still better than the old-school way, where you would have to wait a week for some technical guy to get the result set for you.

Schema-on-read, building data sets on the fly, data virtualization and subsets of it like data federation: all of those are extremely useful in a data warehouse and analytics environment. Their performance has become so much more bearable that they have all reached a whole new plateau of usability.
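
To make the schema-on-read idea concrete: raw records are stored untouched, and a schema is applied only when a query reads them, so different questions can impose different schemas on the same data. A minimal sketch using JSON lines and Python’s standard library; the field names and the two schemas are hypothetical.

```python
import json

# Raw data is kept as-is; nothing was modeled or validated at load time.
RAW_LINES = [
    '{"order_id": "1001", "amount": "19.99", "region": "east"}',
    '{"order_id": "1002", "amount": "5.00", "region": "west", "coupon": "SPRING"}',
    '{"order_id": "1003", "amount": "42.50", "region": "east"}',
]


def read_with_schema(lines, schema):
    """Apply a {field: type} schema at read time; missing fields become None."""
    for line in lines:
        record = json.loads(line)
        yield {field: (cast(record[field]) if field in record else None)
               for field, cast in schema.items()}


# Two queries impose two different schemas on the same raw data.
revenue_schema = {"region": str, "amount": float}
coupon_schema = {"order_id": int, "coupon": str}

east_revenue = sum(r["amount"] for r in read_with_schema(RAW_LINES, revenue_schema)
                   if r["region"] == "east")
coupons = [r for r in read_with_schema(RAW_LINES, coupon_schema) if r["coupon"]]

print(round(east_revenue, 2))  # 62.49
print(coupons)                 # [{'order_id': 1002, 'coupon': 'SPRING'}]
```

The cost Philip warns about shows up here too: every query re-parses and re-casts the raw data, which is why performance, rather than capability, held the approach back for so long.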

[00:29:56] Eric: I’ll bring in Tyler Owen again too. The other fun part about this industry is that there are so many more people getting involved. We talked about this years ago. BI for the masses is what we talked about. Now, it’s here. That’s one reason why Snowflake did so well, because we wandered into the woods with the Hadoop movement. A lot of reverse engineering was required to make that stuff work. It turns out data warehousing works pretty darn well for basic business use cases. There are so many more people getting into the game. That’s good news for everyone.

[00:30:29] Tyler: Going to Philip’s point, with Vantage, for example, if you want to explore a new data set: native object stores are pervasive across all three of the major cloud service providers. To explore these data sets, you need to be able to do some schema discovery, in such a way that the tool can provide you with an auto schema. If that doesn’t work, exception handling can help you sort through it. Those tools are becoming pervasive and very helpful.

Whether it’s people interfacing directly with our toolset or with tools that are plugged in on top of us, we’re finding more that we have to play well with others. We have a lot of folks who, for example, are big fans of things like AWS SageMaker. They want to be able to connect Vantage with AWS SageMaker and have it seem like a view of a table. We have enabled that type of integration back and forth. We’re trying to make it seamless for that end-user or those business folks as they’re trying to get the answers they’re looking for.
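
The auto-schema-plus-exceptions workflow Tyler describes can be approximated in a few lines: infer a type for each field from a sample, then route records that do not fit into an exception list for review instead of failing the whole load. This is a generic illustration, not how Vantage implements schema discovery; the type-widening rule and the sample data are assumptions.

```python
from typing import Any

# Narrowest-to-widest type order used for inference (an assumed rule).
RANK = [int, float, str]


def infer_type(value: str) -> type:
    """Return the narrowest type that can parse value, falling back to str."""
    for candidate in (int, float):
        try:
            candidate(value)
            return candidate
        except ValueError:
            pass
    return str


def infer_schema(sample: list[dict[str, str]]) -> dict[str, type]:
    """Widen each field's type until it fits every value in the sample."""
    schema: dict[str, type] = {}
    for row in sample:
        for field, value in row.items():
            t = infer_type(value)
            current = schema.get(field, t)
            schema[field] = RANK[max(RANK.index(current), RANK.index(t))]
    return schema


def load(rows: list[dict[str, str]], schema: dict[str, type]):
    """Cast rows to the schema; rows that fail go to an exceptions list."""
    good: list[dict[str, Any]] = []
    exceptions: list[tuple[dict[str, str], str]] = []
    for row in rows:
        try:
            good.append({f: schema[f](row[f]) for f in schema})
        except (KeyError, ValueError) as err:
            exceptions.append((row, repr(err)))
    return good, exceptions


sample = [{"id": "1", "price": "2.50", "sku": "A-7"},
          {"id": "2", "price": "3", "sku": "B-1"}]
schema = infer_schema(sample)
print(schema)  # {'id': <class 'int'>, 'price': <class 'float'>, 'sku': <class 'str'>}

rows = sample + [{"id": "three", "price": "1.00", "sku": "C-9"}]
good, exceptions = load(rows, schema)
print(len(good), len(exceptions))  # 2 1
```

Keeping the bad row, along with the reason it failed, mirrors the exception-handling step: a person can fix the data or widen the schema instead of discovering the problem mid-query.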

[00:31:29] Eric: Chris, do you want to chime in real quick?

[00:31:31] Chris: I would take it up a level. It used to be that data analysis and data warehouse work supported the business. For more and more businesses, it is the business. It doesn’t matter if you’re making food, transportation, or logistics. Your business is becoming data.

[00:31:48] Eric: It’s the analysis of that data. Look at the supply chain disruptions we had, first from tariffs and then from COVID. That’s a big deal. That’s a very tough nut to crack in terms of analytics. The supply chain has lots of different moving parts, dependencies, and so forth, which is good material if you have the right technology to scan through it and do the discovery. Maybe we will talk about that in our final segment. Discovery is my favorite part. It’s what Tyler was talking about: looking at a data set and trying to find some general shape or pattern in there that you can leverage. That’s a process, and it’s usually a human process. Folks, we will be right back.

—

[00:33:41] Eric: We’re back here for the final roundtable segment. What a great show. We’ve got Philip Russom, now an independent analyst. Call him if you want to understand data warehousing or anything in the data space. He’s very good at all that stuff. We’ve got Chris Gladwin from a company called Ocient, a relatively new company with a powerful hyperscale data warehouse (I love that stuff), and Tyler Owen from Teradata, which has been doing this stuff for years. I love this new product announcement that you have, Tyler.

First, I want to talk quickly about architecture and the difference in architecture between a data warehouse, which is a relational database, and a data lake, which is a very different thing. The data lakehouse came along as a way to bridge those worlds. I view it as a situation where they all have some value; the question is what you need for your business. Philip, what do you think about the architectural differences between warehouses, data lakes, and this new lakehouse concept?

[00:34:34] Philip: As background, I want to remind everybody that there’s always architecture. There are sometimes seventeen of them. That’s the thing. If you look at pretty much any technology stack in IT, it’s going to bring together multiple architectures. They will stack on top of each other or they will be side by side and inevitably have some overlap. With the data lake and the data warehouse, most people do conceptualize them as being side by side. There’s a big overlap in the middle.

We have been talking about use cases and everything from traditional reporting to data science. We have been talking about it like it’s one seamless thing but in practice, it’s not. Part of the reason it’s not is that the data requirements for reporting dashboards and for more discovery-oriented machine learning, artificial intelligence, and predictive analytics, are extraordinarily different between the two.

The lake has stepped up to do things that we never designed warehouses or warehouse platforms to do. Warehouse platforms are about ruthlessly structured data with a bulletproof audit trail because you will be audited: “Before I make a decision based on your report, where did you get that data?” It’s that kind of question. The lake is enabling analysts and data scientists who are on more of a discovery mission. They’re trying to find the stuff we don’t know about.

They need the kind of hyperscale volumes that Chris has been talking about. That makes a data scientist salivate. You get the idea. If we look at what the warehouse and the lake do, they partition roughly into reporting and data science. That’s a very simplistic statement on my part, but it’s a generalization that helps people understand why you would need both. They do not replace each other. They’re complementary. When you put a lake and a warehouse together, you have a much better chance of satisfying all the data requirements of all the use cases.

[00:36:24] Eric: Chris, I’ll throw this over to you. The lake is great, as Philip has suggested, for data science and for fueling all sorts of discovery and analysis. The warehouse is great for deterministic findings. When you want to bet your company on a new pricing model, that’s most likely going to come from your warehouse. What do you think?

[00:36:47] Philip: The warehouse is about tracking what we know. The lake is about discovering what we don’t know.

[00:36:51] Eric: Chris, what are your thoughts?

[00:36:52] Chris: What we see happening is the data analysis industry is in the midst of a big transition. What’s driving it are these underlying hardware trends we talked a little bit about before. The cost of storage is about $0.10 a gig on NVMe solid-state and about $0.03 a gig on a spinning disk. With techniques like the Zero Copy Reliability we have pioneered for databases at Ocient, we see those crossing over at parity over the next few years to where you will get 500 times or 5,000 times the performance at the same price on a storage basis.

The next transition that’s going to occur within that ten-year period is that the cost of data analysis on DRAM and on solid-state is also going to cross over. DRAM currently provides more analysis per dollar, measured in random 4K reads per second, which at the end of the day is the thing that drives performance. That’s going to cross over in about five years, when solid-state is going to become less expensive than DRAM.

As those two transitions occur down at the hardware layer, we expect to see databases migrate to an architecture where both compute and storage live on solid-state. The only reason why you have a separate tier for storage from your compute tier is that it’s currently less expensive. At Ocient, we’re able to add storage at the cost of S3 but this is high-performance NVMe storage.

As that becomes more pervasive in the industry, it’s going to change architectures. If you can get in-memory performance at the cost of data lake storage, architectures are going to collapse into a single tier. We think we’re at the beginning of about a ten-year transition that’s going to be driven by those performance and price trends.

[00:38:37] Eric: That’s very interesting. I remember Dr. Laura once did a presentation where he created a pyramid and talked about how all change starts at the hardware level. A lot of times, it then moves up through the operating system, the applications, and so forth, but when that hardware level changes, that’s a big deal. You think about chip design.

That’s another ten-year process: what you design in the next couple of years is aimed at 3 or 4 years beyond that, because that’s the amount of time it takes. That timeline is collapsing a little bit, but not too much, because it takes time to wrap your head around these things and then rearchitect systems, as you folks have learned.

I’ll throw it over to Tyler for some closing comments here. What I love about Vantage and this new direction you’re taking is the appreciation of a very federated world. If you think about it in data warehousing, what do we do? We wanted to locate all the data in one place such that we can run some analyses. We had to build all those cubes to make it faster but now, the world is much more federated.

It’s funny because the data lake took the exact same idea: “Let’s put all our data in the same place.” I was like, “Are you sure that’s the right way to do it? It might get slow and hard to find things.” We made that same mistake again. Maybe with this lakehouse concept, we’re solving both of those problems. What do you think? Tell the broader audience about this new announcement. Go ahead, Tyler.

[00:40:02] Tyler: What we hear a lot in the market is that our customers and prospects are after price performance. That’s the measurement: “How much performance am I getting? What’s the query performance like?” We have been able to blow those statistics away with our results, primarily because we’re able to leverage the different architectures we have been talking about.

If we want to run something at a sub-second response time and it’s a mission-critical workload, not only can we protect it and isolate different pieces of it, but we can put it on the storage that we feel is most appropriate. We have those options. In the announcement you mentioned, what we have done is take Teradata’s expertise and heritage and combine it with a next-generation architecture as another deployment option. Philip talked about those architectures and the separation of compute and storage.

We have taken that to a whole other level: we have taken our workload management capabilities and everything around them and applied them to the cloud, with the ability to separate compute and storage while still building in the intelligence. We have gotten into using primary and compute clusters to isolate workloads. We built it all into a single pane of glass so that our customers can manage it much more easily and use it effectively to get to the business answers.

These are very different data sources. If we’re talking about traditional business reporting from structured data, we can run it off what we consider traditional Teradata, but if the data scientist, the data engineer, or even the business person trying to get those business answers wants to get to the heart of their analytics and what it means, we have introduced a whole new concept called ClearScape Analytics.

That is an end-to-end analytics process where we provide the tools, plus the openness to bring your own tools if you like, from the beginning, where you’re doing data wrangling and analysis and exploring some of those object stores we talked about, through to the end of the process, where you’re modeling that data and then operationalizing it. We’ve now got a complete suite of tools to help with those analytic challenges, which differentiates us in the market.

[00:42:01] Eric: Folks, look all these gentlemen up online. We’ve got Tyler Owen from Teradata, Chris Gladwin from Ocient, and Dr. Philip Russom. We will have him on the show again. I do want to point out that the last conference I went to before the whole pandemic thing hit was the Teradata conference out in Denver. I remember looking at a lot of the vertically-oriented solutions.

It’s funny because here in this industry, we’re all geeking out on the speeds, the feeds, the hardware, and the technical side of things. Business people mostly don’t care about that stuff. They want to be able to analyze what’s happening in their organization and get some situational awareness. I was very impressed with the vertical solutions that Teradata had on the floor there at the conference because they were focused on the particular use case.

That’s important because retail is going to be a whole lot different from finance, which is going to be a little bit different from insurance, which is going to be a lot different from healthcare. Think about the number of factors that come into play. When it’s healthcare, you’re talking about life sciences. You have millions of data points that are crossing over and can affect each other. If you’re talking about pharmaceutical design, for example, you’ve got to do some pretty deep queries.

That’s real hardcore data science. You’re going to need some kind of data science platform to excel in the pharmaceutical industry these days, but the options are there. The solutions are everywhere. There are all kinds of great ways you can do this. You can hop online. Wikipedia even has lots of great definitions of things. Data warehousing is alive and well. It’s not going anywhere. Hadoop, not so much. We will talk to you next time. Thanks to our guests.

—

[00:43:35] Eric: Ladies and gentlemen, welcome back once again. This is the five-minute drill. We have Philip Russom, my good buddy of many years now, and five key activities for modernizing a data warehouse. Philip, give us the five-minute drill. Take it away.

[00:43:51] Philip: Eric, it’s good to see you again. Thanks for having me on the program. I pulled together five keys to building a modern data warehouse. There are other keys as well, but these are some of the higher priorities. With data warehouses, as with a lot of solutions you build, you want to think about what you’re going to do with the thing. With the data warehouse, it’s important to think about what use cases will be supported by your modern data warehouse.

Is it going to be the old stuff, the traditional reports, and the dashboards? Are you going to try and do the new stuff as well like data science and advanced forms of analytics like machine learning and artificial intelligence? There are also a variety of recently-emerged use cases for the warehouse like self-service, data sharing, and data archiving. A variety of things like marketplaces and so forth quite often do get housed in the warehouse.

Some people put their customer 360 there. You need to have a good list of use cases before you start designing the warehouse because the data requirements and the business requirements of all these many use cases will determine many of the design features and the architectural features of your warehouse and will certainly influence what platforms you choose to go with it.

Speaking of platforms, data warehouse modernization has been going on regularly for about fifteen years. There’s this phenomenon called re-platforming. For years, people have been migrating warehouses from one platform to another. Years ago, before cloud became common, we would re-platform from one on-premises platform to another. Commonly, I saw a lot of people leave Oracle and try Teradata, or they would go down market and try SQL Server.

There was a lot of that going on for years, and there’s still re-platforming going on now as people rethink what their warehouse has to do for the business. Cloud is so prominent that most re-platforming does involve moving to a cloud platform. Some people already have a favorite platform on premises like Teradata. They will move to Teradata Vantage on cloud. We see a lot of Oracle users sticking with Oracle products but going to the cloud versions and so forth. There are also a lot of brand-new products that have been built to be cloud-native from the bottom up.

We do see people trying a variety of platforms and re-platforming. Usually, we’re going to cloud as the target for this re-platforming, but there are other platform choices going on here. This is a very rich time. My most recent employer was Gartner. I worked on a magic quadrant where we looked at 40 different database management systems. That was our shortlist. We had to get it down to 20 because Gartner wouldn’t let you cover more than 20 vendors in one magic quadrant.

This is a very rich time for the database management system. You’ve got a lot of stuff to consider. You have to think about your cloud commitment. Do you need more of a hybrid data warehouse? That’s certainly the case for many people, because you can put some warehouse data sets on premises and some on cloud. Remember Hadoop. Hadoop is gone, but we still have some Hadoop habits, like working with data in file systems instead of a DBMS. That works out for a lot of data science, not necessarily other analytics, but you get the idea.

You’ve got a lot of choices to consider. Likewise, you have a lot of architectural variations. I worked at TDWI for years. Our definition of the warehouse is it’s a data architecture. You populate it with data. The data is very important. Metadata is important but it’s architecture. It’s not those platforms that I was talking about. It’s the data and the designs you come up with for its architecture.

All the stuff I’ve talked about so far does influence your designs at the local level for data models and also at the macro level for your architecture. We have a lot of architectural change going on. Much of it is driven by cloud. Migrating to cloud is an opportunity to reset a lot of your designs and habits. I see people resetting their team structures for the warehouse and analytics. I also see people adopting new methodologies as they migrate to cloud, like DataOps and other approaches to data engineering.

Architecture and that other stuff tend to be recreated as we go to cloud, because you don’t want to just move to cloud. You want to improve things as you go there. Storage is very different on cloud. You need to study storage before you start designing for cloud. That’s one of the big stumbling blocks. When people fail to understand the different aspects of storage on cloud, that’s where failures come from.

Finally, a lot of data modeling is still with us. We still have a lot of dimensional data in the warehouse but OLAP is gone. It has been replaced by star and snowflake. A 360-degree view of the customer is driving us towards flat and wide data models. With so much file-based data coming on, whatever those files have is influencing our modeling.

[00:48:39] Eric: Here we go. Those are great five-minute drills.

Important Links

- Philip Russom

- Ocient

- Teradata

- Amazon Redshift

- BigQuery

- Snowflake

- Hadoop

- Vantage

- SoftWare in Motion

- S3

- Python

- SageMaker

- Oracle

- SQL Server

About Eric Kavanagh

Host of #DMRadio & #InsideAnalysis! On-Air in New York, Boston, San Francisco, Chicago, Portland, Salt Lake City, Los Angeles, Atlanta, DC… Former@UN & @SXSW

About Philip Russom

Philip Russom is a well-known figure in data management, data warehousing (DW), data integration (DI), big data, and analytics, having worked as an industry analyst for 25 years, producing over 650 research reports, magazine articles, speeches, and Webinars. He covered data management for Gartner, TDWI, Forrester Research, Giga Information Group, Hurwitz Group, and his own private analyst practice. He also focused on data management as a contributing editor at Intelligent Enterprise and DM Review magazines. His current research and client interactions involve the many types of database management systems (DBMSs) that are available on cloud, on premises, and in hybrid or distributed architectures, with a focus on DBMS use cases in data warehousing, data lakes, data lakehouses, and analytics.

About Tyler Owen

Product Marketing visionary and goal-driven business strategist. Leads storytellers building positioning and messaging for deeply technical products via cross-organizational collaboration.

Excels in developing and delivering Product Marketing for multiple product initiatives while running a Product Marketing Office. Leads all Product Launch activities, coordinating efforts and resources across the organization.

Proven record in driving change by leading cross-functional teams of 100+ and creating a collaborative environment that fosters creativity, innovation, and learning to advance product/technology strategy, and messaging to support global customer services/sales.

About Chris Gladwin

Chris Gladwin is an American inventor, computer engineer and technology entrepreneur, who has founded or co-founded a series of tech-related companies including MusicNow, Cleversafe and Ocient.

Philip Russom, Tyler Owen, and Chris Gladwin join host Eric Kavanagh to discuss modern data warehousing and how it plays with data science and other analytics architectures.